Soundstage Is (Much) More Complicated Than You Think

Many listeners end up disappointed upon hearing the best headphone soundstage, realizing it's not as big a game-changer as they'd expected. Join listener as they discuss why soundstage is more complicated than we think, and how audiophiles can better manage their expectations and understand this elusive quality.

“Soundstage” might be the most ubiquitous term in the audiophile lexicon, and it’s pervasive enough that it’s the only term to have a consistent foothold in the minds of non-audiophiles. I almost never see tech YouTubers, studio engineers, or gamers talking about plankton or microdynamics, but I see nearly all of them saying they want “good soundstage.”

Even if the term soundstage means different things to different people, I think the core idea can be summarized fairly neatly. When listening to headphones, the sound occupies a finite space, and the shape of this space, as well as how far it extends past the bounds of your head, is what audiophiles refer to as “soundstage” in headphones.

I’ve seen many people—non-audiophiles and audiophiles alike—take this definition to hyperbolic extremes, saying that headphones with good soundstage can sound “like speakers.” Some even say that good soundstage can make music sound like it’s “being played by musicians in the room with you.”

But that’s… not really how headphones work.

Building an understanding regarding how they do work—and why soundstage is way more complicated than most people think—is the best way I can think of to help soundstage enjoyers predict what kind of headphone might be best for them. More importantly, it’ll help new audiophiles ground their expectations when it comes to headphones that are widely claimed by others to have “good soundstage.”

Because many people—myself included—end up disappointed when they finally hear the best soundstage headphones have to offer. Why? Let’s get into it.

Many of the concepts and information put forth in this article were originally featured in my review of the Grell OAE-1.

Localization, a.k.a. “Actual Soundstage”

Before I go deeper: much of this section has already been covered in my article about Diffuse Field, which will help readers understand the terminology used here, as well as why Headphones.com, Crinacle, and Sean Olive use Diffuse Field as the necessary anatomical baseline (not a target response on its own) for evaluating headphones and IEMs.

Humans have an incredibly complex hearing system, and our ability to orient ourselves within our world is largely thanks to how this system works. Even with our eyes closed, we can unpack the sounds emitted by sound sources in the world to determine how far or near they are, what direction they’re coming from, and potentially even how big they are.

People generally enjoy it when their hearing system is engaged by audio delivery systems like “binaural audio” and “surround sound.” Both take advantage of our hearing system, using different approaches to deliver immersive—and at times even hyper-realistic—audio content by placing multiple sound sources around the listener.

Fig. 1: Neumann KU100 being used for drum recording. Credit: Neumann

Binaural recordings are made with a dummy head microphone. This is different from a traditional microphone in that it replicates the effects a human listener’s head would typically have on incoming sounds. We’ll talk more about these effects later.

In this case, the dummy head microphone serves as a stand-in for where the listener’s head would be, and when we listen to binaural recordings wearing properly equalized headphones, we can get a fairly convincing sense of our head being where the dummy head was, or even of the recorded sound source(s) being placed in the same space we are.

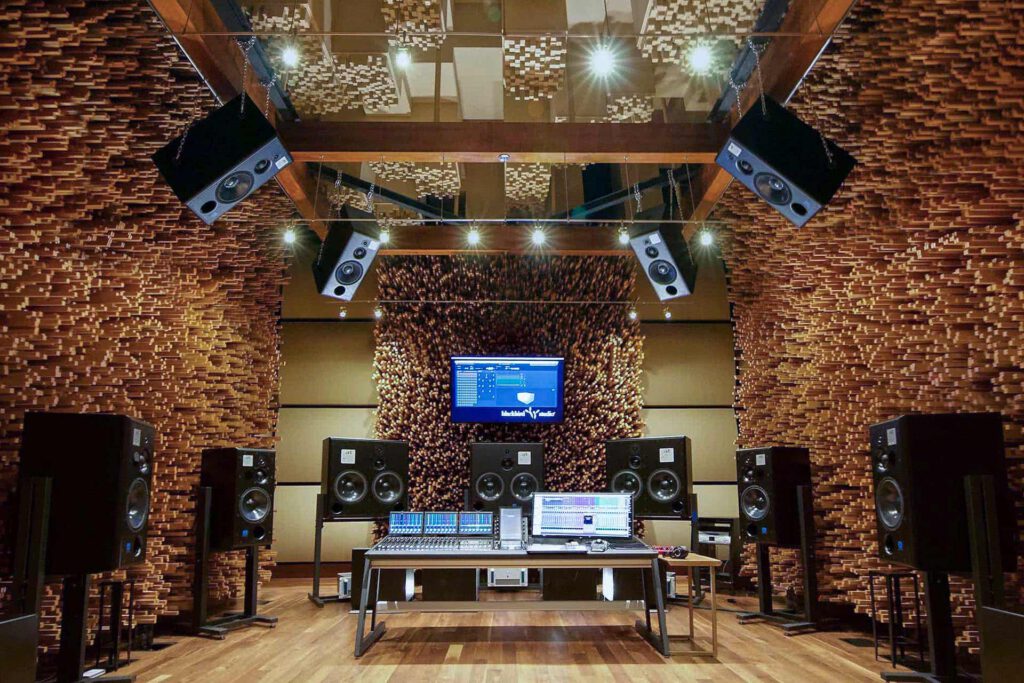

Fig. 2: Blackbird Studio C, configured with surround sound speakers for mixing in Dolby Atmos. Credit: Blackbird Studio

Surround sound is even simpler, in that it actually is made up of sound sources placed around the listener. The sense of objects producing sound around us is completely natural, because the sound sources are actually interacting with our anatomy the same way any sound in the world would.

These delivery systems provide the most exciting version of what I’ll call “actual soundstage” in the world of recorded audio content, and it’s worth unpacking how and why they work because of how engaging and immersive they can be.

So to build that understanding, let’s talk about the critical factors that make up human localization (a.k.a. the ability to locate sound sources in space based solely on their sound), because properly accounting for the human localization system is why surround sound and binaural audio are so fun to experience when done right.

Interaural Time Difference (ITD)

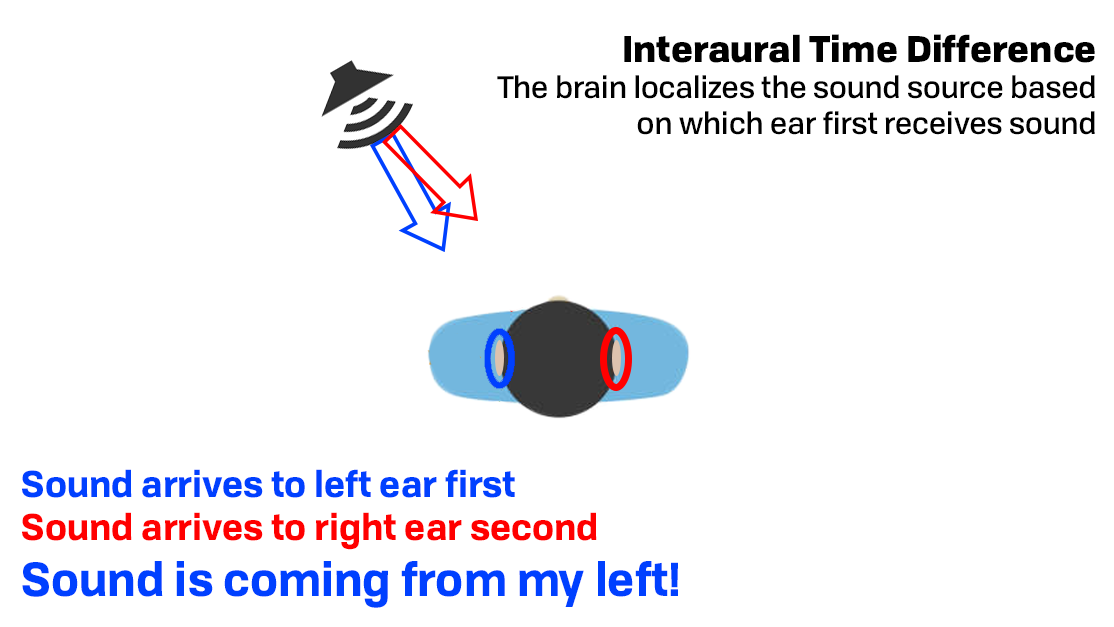

Fig. 3: Interaural Time Difference explained

Most of us have two ears. Our brain is constantly unpacking the incoming sound differences between them to determine where sound sources are located. Part of this includes unpacking Interaural Time Difference (ITD), or the difference in a sound’s arrival time between our two ears.

As shown in the example above, if a sound arrives at one ear before the other, our perception of the sound will be biased to whichever side received the sound first.

We’re quite good at hearing the difference in a sound’s arrival time between our two ears, but we don’t necessarily notice it consciously. ITD is part of the larger localization process the brain handles unconsciously, so instead of hearing “the sound is arriving half a millisecond earlier at my left ear than my right ear,” we often hear “the sound is coming from my left.”
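To give a sense of how small these time differences are, here’s a minimal Python sketch of the classic Woodworth spherical-head approximation of ITD for a distant source on the horizontal plane. The head radius (8.75 cm) and speed of sound (343 m/s) are assumed textbook values rather than measurements of any particular listener, and real heads are not spheres, so treat the output as a ballpark figure.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s, air at roughly 20 °C (assumed)
HEAD_RADIUS = 0.0875    # m, a commonly used average head radius (assumed)


def woodworth_itd(azimuth_deg: float,
                  radius: float = HEAD_RADIUS,
                  c: float = SPEED_OF_SOUND) -> float:
    """Approximate ITD in seconds using ITD = (r / c) * (theta + sin(theta)).

    Azimuth runs from 0 degrees (straight ahead) to 90 degrees (fully to one side).
    """
    if not 0.0 <= azimuth_deg <= 90.0:
        raise ValueError("azimuth must be between 0 and 90 degrees")
    theta = math.radians(azimuth_deg)
    return (radius / c) * (theta + math.sin(theta))


for az in (0, 30, 60, 90):
    print(f"{az:>2} deg -> {woodworth_itd(az) * 1000:.2f} ms")
```

Under these assumptions, even a source directly to one side yields only about 0.66 ms of ITD, which is why the differences our brain resolves here are fractions of a millisecond.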

Interaural Level Difference (ILD)

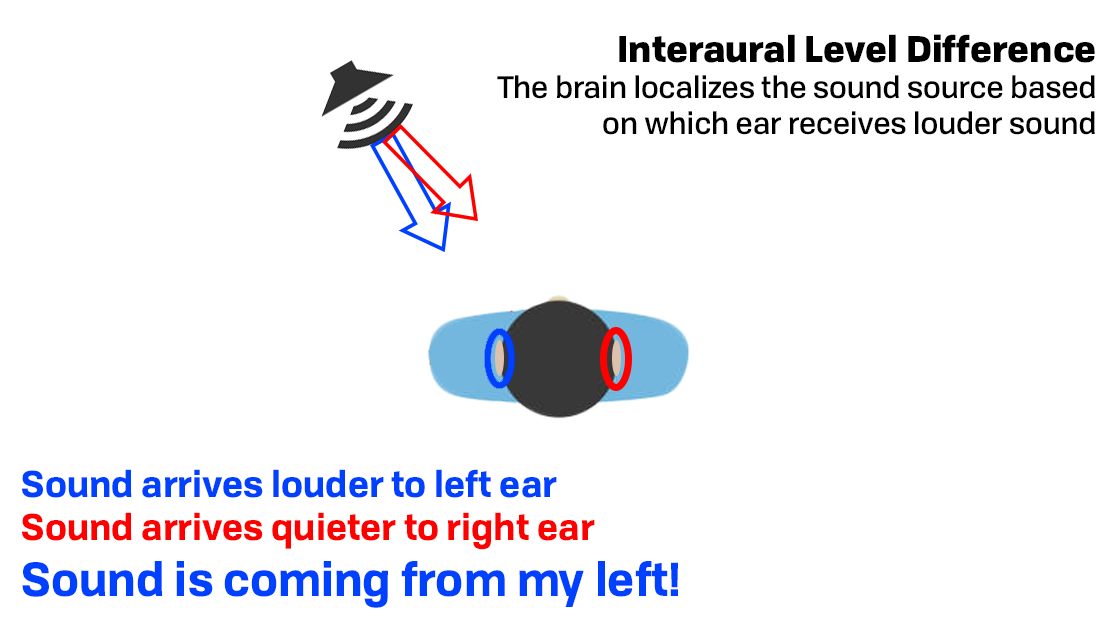

Fig. 4: Interaural Level Difference explained

Interaural Level Difference (ILD) is even simpler: The ear closest to the sound source will receive a louder sound than the ear that is farthest from the sound source.

Again, the brain unpacks this unconsciously as part of the larger localization process in real life. This means we don’t consciously hear “this sound is 3dB louder in my left ear than my right ear,” but instead “the sound is coming from my left.”
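For the curious, a level difference like that “3dB louder” is just a ratio of sound pressures expressed in decibels. Here’s a minimal Python sketch computing a broadband ILD from the RMS level at each ear. The sine-wave signals are hypothetical stand-ins, and real ILDs vary strongly with frequency (the head shadows treble far more than bass), so a single broadband number is a simplification.

```python
import math


def rms(samples: list[float]) -> float:
    """Root-mean-square level of a block of samples."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def level_difference_db(left_rms: float, right_rms: float) -> float:
    """ILD in dB (20 * log10 of the pressure ratio); positive means left is louder."""
    if left_rms <= 0 or right_rms <= 0:
        raise ValueError("RMS values must be positive")
    return 20.0 * math.log10(left_rms / right_rms)


# Hypothetical 1 kHz tone at 48 kHz; the left-ear copy is scaled by ~1.41,
# which corresponds to roughly a 3 dB level difference.
right = [math.sin(2 * math.pi * 1000 * n / 48000) for n in range(480)]
left = [1.4125 * s for s in right]
print(f"ILD: {level_difference_db(rms(left), rms(right)):.2f} dB")
```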

Head-Related Transfer Functions (HRTFs)

Sounds emitted from sound sources in the real world—whether they be speakers, instruments, or people—are transformed by your body, neck, head, and ears before they reach your eardrum. How these sounds are transformed depends on your specific anatomy, as well as the angle and elevation of the sound source relative to your ear.

Say we’re in a completely anechoic chamber, where we’ve placed a speaker that measures flat with a traditional measurement microphone.

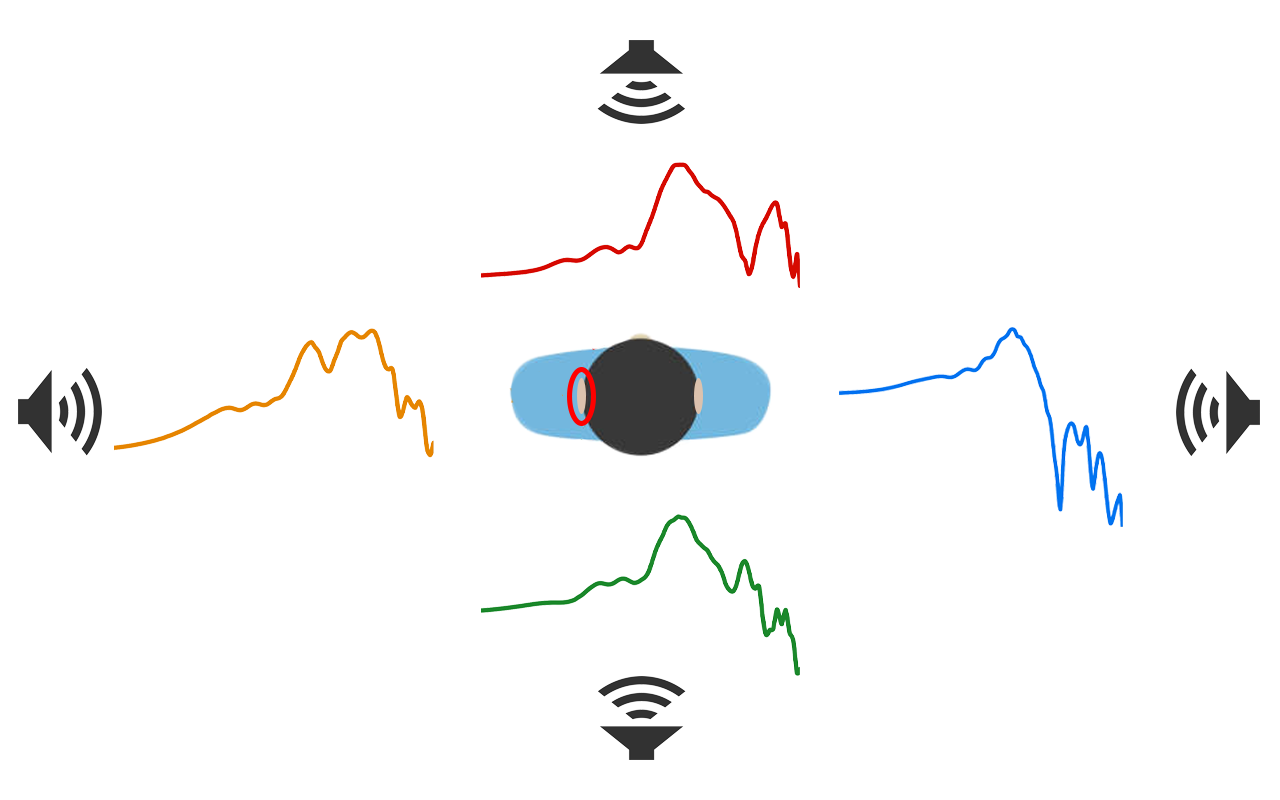

Fig. 5: 0º, 90º, 180º and 270º Free Field HRTFs of the B&K 5128, measured at the left eardrum (circled)

If this flat speaker is measured at different angles relative to the left ear of a listener—or in this case, a B&K Type 5128 Head and Torso Simulator (HATS)—we would see measurements taken at the left eardrum look like the above curves. The speaker placed directly in front of the head would look like the red curve, and the same speaker when placed on the opposite side of the head to the ear would look like the blue curve, etc.

These frequency response transformations—called Head-Related Transfer Functions (HRTFs)—exist for every angle and elevation around you, and these transformations are always affecting incoming sounds for both of your ears in the real world. We’ve been listening to sounds filtered through our HRTF for our whole lives, so we’ve grown accustomed to these effects and do not actively hear them.
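In signal-processing terms, each HRTF is a linear filter. Applying it to a sound means convolving that sound with the HRTF’s time-domain counterpart, the head-related impulse response (HRIR), once for each ear; in the frequency domain this is a multiplication, which amounts to adding the HRTF’s dB curve to the source’s response. The pure-Python sketch below is illustrative only: the HRIR values are made-up placeholders, whereas real HRIRs are much longer and are measured separately for every direction and every ear.

```python
def convolve(signal: list[float], impulse_response: list[float]) -> list[float]:
    """Direct-form linear convolution; output length is len(signal) + len(ir) - 1."""
    out = [0.0] * (len(signal) + len(impulse_response) - 1)
    for i, x in enumerate(signal):
        for j, h in enumerate(impulse_response):
            out[i + j] += x * h
    return out


def render_direction(source, hrir_left, hrir_right):
    """Filter one mono source through the left- and right-ear HRIRs for one direction."""
    return convolve(source, hrir_left), convolve(source, hrir_right)


# Placeholder HRIRs for a source somewhere to the left (NOT real measurements).
# The right-ear response starts two samples later and is quieter, a crude stand-in
# for ITD and ILD; the extra taps stand in for direction-dependent spectral shaping.
hrir_left = [1.0, 0.3, -0.2, 0.05]
hrir_right = [0.0, 0.0, 0.5, 0.2, -0.1]

click = [1.0, 0.0, 0.0]  # a single impulse
left_ear, right_ear = render_direction(click, hrir_left, hrir_right)
```

Feeding in a single click simply returns each HRIR, which makes it easy to see that the same sound arrives at each eardrum already transformed differently.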

So when we hear something to the left, we’re not only hearing the sound arriving first at our left ear (ITD), or louder at our left ear (ILD). We’re also hearing specific frequency response cues in both ears that our brain has correlated to leftward positioning.

As with ITD and ILD, we don’t consciously hear “wow, the sound at my left ear has a lot more upper mids and treble than my right ear.” We hear “the sound is coming from my left.”

What this means is that we don’t hear massive swings in frequency response arising from where sound sources are located, because our brain is constantly subtracting these frequency response cues from our perception as part of our inherent localization system.

With speakers for example, we hear past any of the chaotic-looking HRTF-based frequency response cues shown in Fig. 5, only hearing “the tone and location of the speaker itself.”

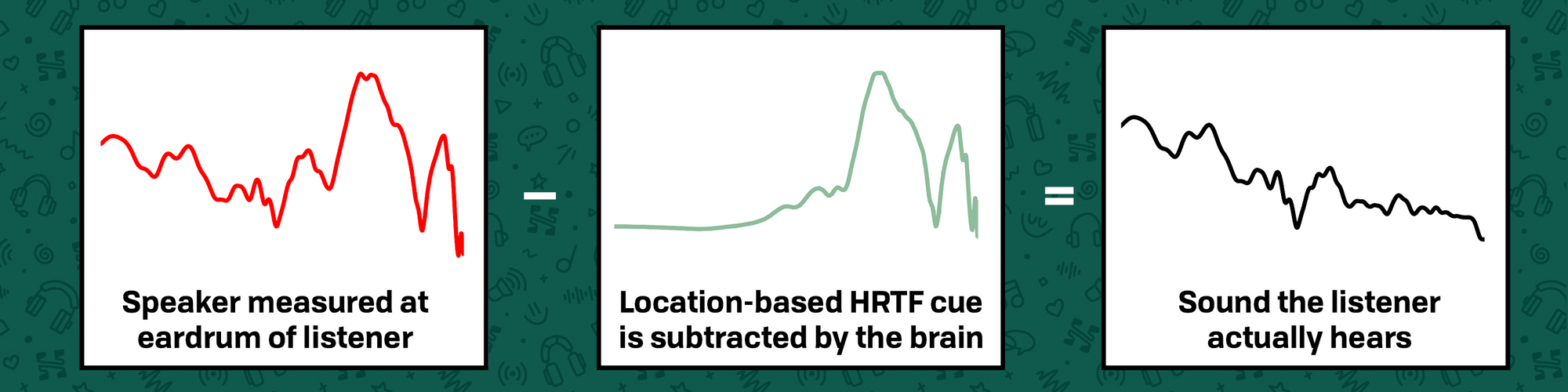

Fig. 6: HRTF Frequency response cues being subtracted from perception via the brain’s inherent localization process

The incoming frequency response at our eardrums is almost certainly very weird after going through the transformations of our HRTF, but our brain subtracts these colorations from our perception, leaving us with “the tone color and location of the sound source.”

Reflections

The arrival of sound at our eardrums is almost never just the sound directly emanated from a sound source. What we usually end up receiving at our ears is a combination of the “direct” sound from the sound source, as well as reflections—or “indirect” sound. Indirect sound arises from the direct sound bouncing off of surfaces like walls, floors, & ceilings, and then arriving at our ears.

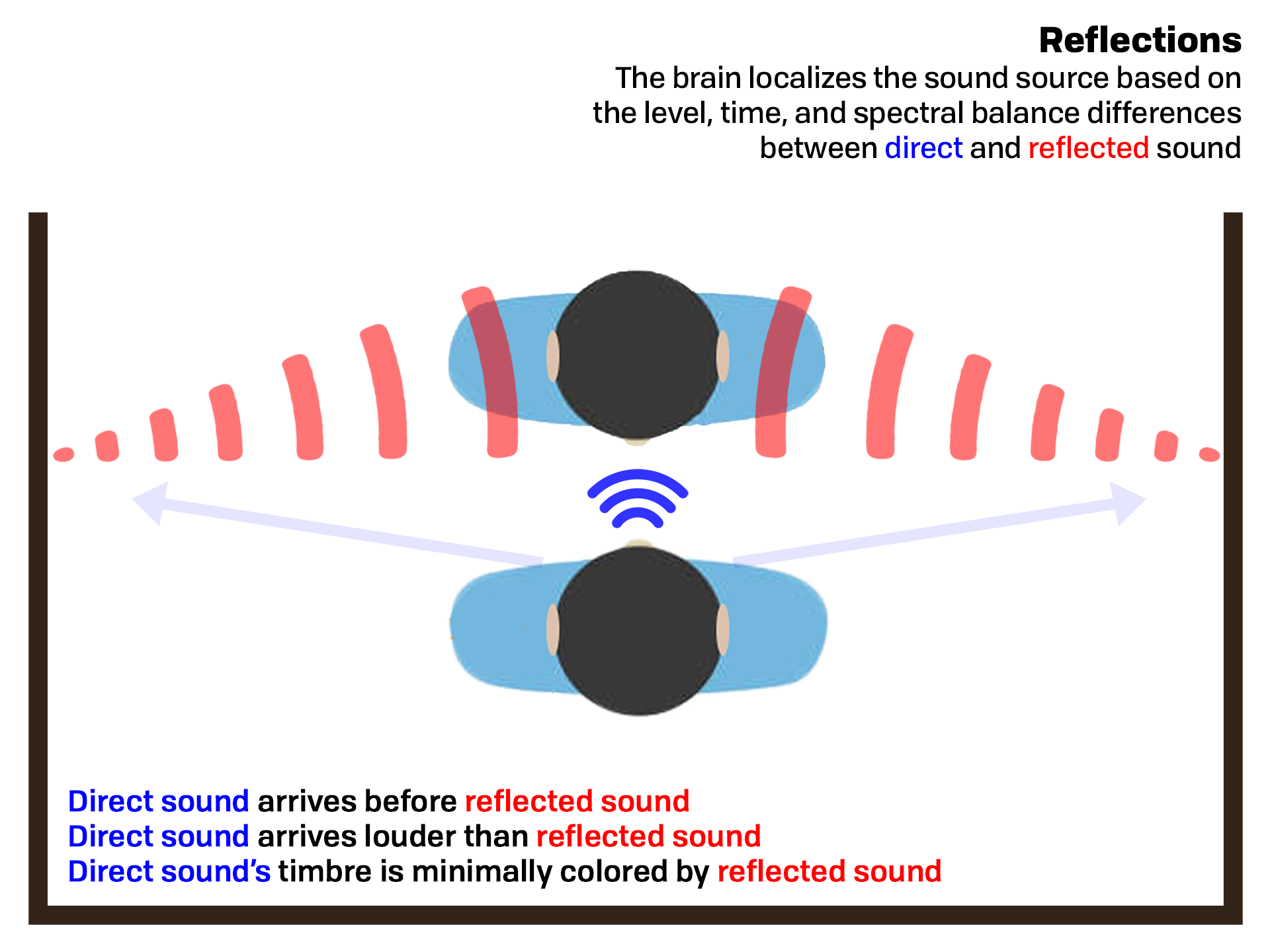

Reflections also involve time and level differences, except this time they’re between the direct sound component and the reflected (indirect) sound component, rather than between our two ears.

Reflections will always arrive later to your ear than the direct sound component, and they will generally* arrive at a lower level as well. Additionally, the direct sound and indirect sound are almost always spectrally different; the tone of reflections is almost never exactly the same as the tone of the direct sound.

Thus our brain’s localization process often takes reflections into account when working out where sound sources are located, specifically how far away they are. To visualize this, let’s imagine you and I are in a concert hall:

Fig. 7: Direct and indirect sound at a short distance

If we’re both standing on the stage about 1 m apart, and the closest wall to us is 5 m away, the direct sound from my voice would reach you significantly earlier than the reflections off the walls, because the distance between us is smaller than the distance between you and any of the walls.

The direct sound from my voice would be significantly louder than the reflected sound as well for the same reason, which means how you perceive the tone color of my voice would not be drastically altered by the reflections of the room either.

All of these factors would be unpacked by your brain to help you understand that I am relatively close to you, even if your eyes were closed. However, now imagine I’m on the same stage, but you’re sitting in the upper mezzanine about 30m away:

Fig. 8: Direct and indirect sound at a long distance

The arrival time of the direct sound would be much more similar to the arrival time of the reflected sound, since the distance between you and the closest wall is now more similar to the distance between you and me.

The direct sound would also be quieter due to distance, while the total energy of reflected sound might not change at all (or even get louder). Your perception of the tone color of my voice would also be more impacted by reflections, since reflections are contributing more to the overall makeup of the sound energy arriving at your ears.

The result of all of these differences—smaller difference in arrival time between direct and indirect sound, higher proportion of indirect sound to direct sound, and tone color being more impacted by the indirect sound—would almost certainly lead to me being perceived as farther away from you.
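To put rough numbers on the two scenarios above, here’s a minimal Python sketch using the image-source method for a single wall reflection. It assumes the talker and listener are both 5 m from one flat, perfectly reflective wall, that sound pressure falls off as 1/r along each path, and that air absorption and every other surface can be ignored. The 30 m case keeps the same wall distance, which simplifies the mezzanine geometry considerably.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s (assumed)


def direct_vs_reflection(separation_m: float, wall_distance_m: float):
    """Compare the direct path with one wall reflection (image-source method).

    Assumes talker and listener are both `wall_distance_m` from a single flat,
    perfectly reflective wall, with 1/r pressure falloff and no absorption.
    Returns (extra delay of the reflection in ms, how many dB quieter it is).
    """
    direct = separation_m
    # Mirroring the talker across the wall: the reflected path equals the
    # straight-line distance from the listener to that mirror image.
    reflected = math.hypot(separation_m, 2.0 * wall_distance_m)
    delay_ms = (reflected - direct) / SPEED_OF_SOUND * 1000.0
    level_gap_db = 20.0 * math.log10(reflected / direct)
    return delay_ms, level_gap_db


for label, separation in (("on stage, 1 m apart", 1.0), ("mezzanine, 30 m away", 30.0)):
    delay, gap = direct_vs_reflection(separation, wall_distance_m=5.0)
    print(f"{label}: reflection lags by {delay:.1f} ms and is {gap:.1f} dB quieter")
```

Under these assumptions, the reflection trails the direct sound by roughly 26 ms and 20 dB at 1 m, but by under 5 ms and under 1 dB at 30 m: the shrinking gaps in time and level are the distance cues described above.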

Reflections are a bit different than the other things I’ve mentioned, because we actually can consciously hear and separate indirect and direct sound rather well, unlike the ITD, ILD, and HRTF stuff. However, it’s still important to note how these reflections ultimately impact our sense of perceived distance, and thus have a part to play in how we localize sound sources in real life.

Why All Of This Matters

Because the thing is… once you’ve left the real world and have moved to “sound being produced by headphones,” NONE of the above happens.

- Headphones don’t have ITD, because both channels play simultaneously.

- Headphones don’t have ILD. When they do, it’s channel imbalance, and we hear it as channel imbalance.

- Headphones do not have a full HRTF interaction; they’re not interacting with your full anatomy at a changing angle or elevation based on your position and orientation.

- Headphones do not have meaningful reflections within the audio band, because the distances inside an ear cup are small enough that reflections only occur in the uppermost treble (and they’re basically imperceptible).

All of the factors that play a big part in how we localize sound in the real world do not exist in headphones. So if headphones don’t have any of the factors that form the primary makeup of localization, then they can’t really have “actual soundstage.”

And if passive headphones can’t have actual “soundstage,” well what do they have?

Diffuse Localization

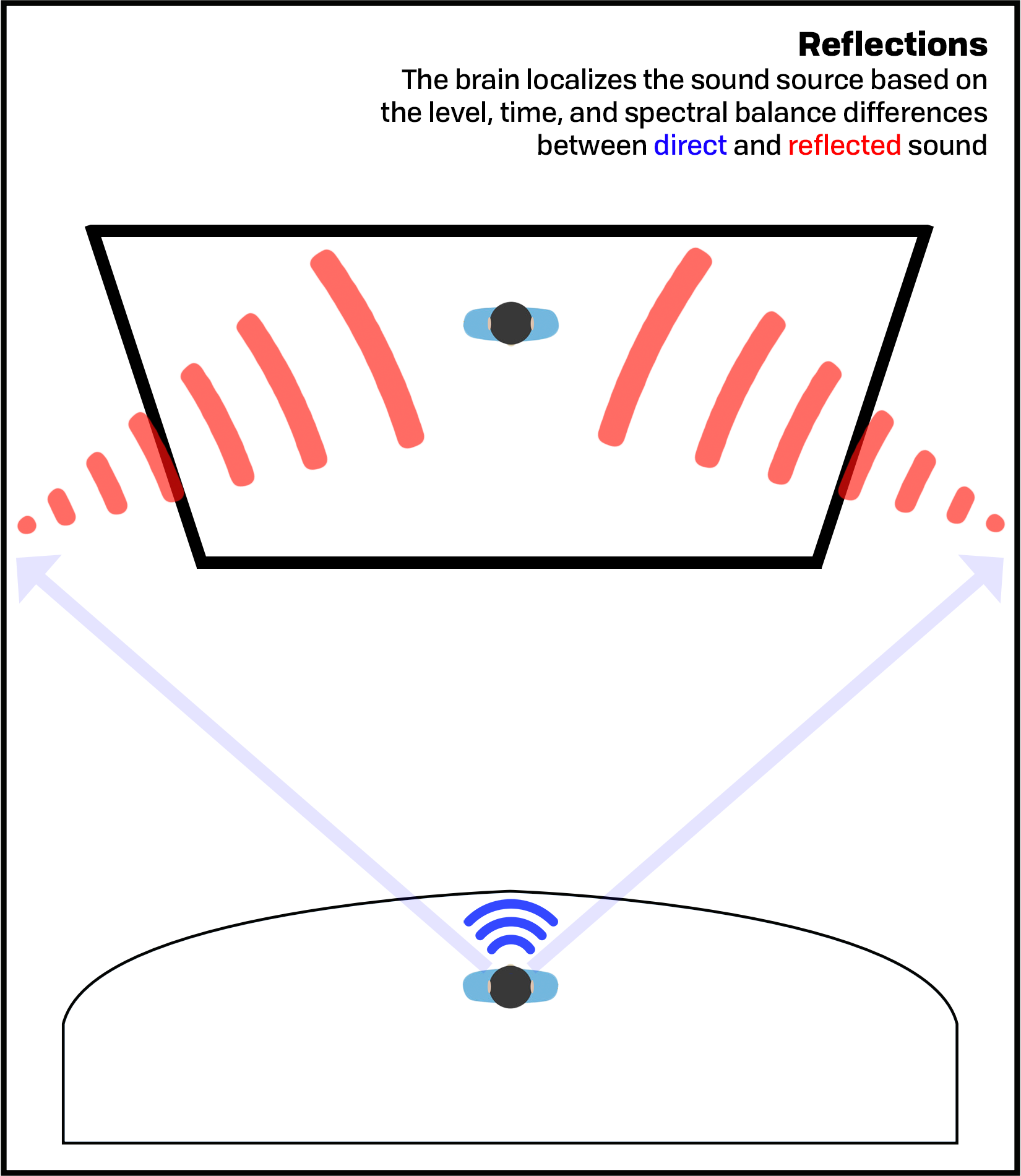

Headphones are affixed to your head with a constant distance, angle, and elevation relative to your ear. Therefore, a headphone’s frequency response won’t change dynamically based on its location relative to your head, neck or torso: it’s neither interacting with them, nor changing its location relative to them.

Because of that invariance of positioning, headphones are devices of “diffuse localization.” And to understand what that means, one needs to understand what a Diffuse Field is.

A Diffuse Field is a circumstance wherein sound is equally distributed at all points of the room and arrives equally from all directions at all frequencies. This means that, unlike the above examples where the location of the sound source causes a difference in frequency response, the frequency response at any one position in the room would be the same as any other position.

So imagine you’re in a Diffuse Field, and there are a few flat-equalized speakers scattered about playing white noise. Regardless of where you are or where you’re facing in the room, the sound would be identical.

Fig. 9: Diffuse Field’s invariability of location-based timbre

Due to the absence of any frequency response cues to localize the sound sources, the sound would seemingly be emanating from either everywhere, nowhere, or from inside your own head. This “diffuse localization” would be unlike any situation you’ve ever experienced in real life… but this is exactly what happens with headphones.

Headphones have the exact same timbral invariability that listening in a Diffuse Field would have; regardless of where you’re facing or how your body is oriented, the sound remains the same. This is “diffuse localization.”

Fig. 10: Diffuse localization in headphones

Diffuse localization, in addition to the fact we’re not getting ILD, ITD, reflections, or meaningful crossfeed with headphones, means that pretty much all of the important sonic information for how the brain localizes real-world sound is missing.

If the brain had all of the necessary information for localizing incoming sound from a headphone—HRTF cues, ITD, ILD, reflections etc., and they all changed dynamically as your head moved—it would have enough information to localize sound properly and we would actually get soundstage as we experience it in real life.

Instead, with passive headphones we are only hearing the frequency response as it exists on our head. And this is why we use Diffuse Field as a baseline HRTF for headphones.
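For readers curious what “Diffuse Field as a baseline” means numerically, here’s a minimal Python sketch. A Diffuse Field HRTF is commonly computed by power-averaging the eardrum responses to sound arriving from many directions, and a headphone measurement can then be expressed relative to that baseline by subtracting it in dB. The equal weighting of directions and all of the numbers below are simplifying assumptions and placeholders, not real HATS data.

```python
import math


def diffuse_field_average(responses_db: list[list[float]]) -> list[float]:
    """Power-average eardrum responses (dB) measured from many source directions.

    Each inner list is one direction, sampled at the same frequencies. Directions
    are weighted equally here (an assumption); real computations typically weight
    each direction by the solid angle it represents.
    """
    if not responses_db:
        raise ValueError("need at least one direction")
    n = len(responses_db)
    n_freqs = len(responses_db[0])
    return [
        10.0 * math.log10(sum(10.0 ** (r[k] / 10.0) for r in responses_db) / n)
        for k in range(n_freqs)
    ]


def compensate(measured_db: list[float], baseline_db: list[float]) -> list[float]:
    """Express a measurement relative to a baseline by subtracting it in dB."""
    if len(measured_db) != len(baseline_db):
        raise ValueError("curves must share the same frequency points")
    return [m - b for m, b in zip(measured_db, baseline_db)]


# Toy eardrum responses for three directions at three frequencies: placeholders only.
directions = [[0.0, 10.0, 3.0], [0.0, 4.0, -2.0], [0.0, 1.0, -6.0]]
df_baseline = diffuse_field_average(directions)
headphone_on_same_head = [0.0, 8.0, 1.0]  # also a placeholder
relative_to_df = compensate(headphone_on_same_head, df_baseline)
```

Averaging power rather than dB values means strong peaks from a few directions pull the baseline up more than a plain arithmetic mean of the dB curves would.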

So that’s HRTF… but what about the “PRTF” that’s been said to have an effect on soundstage by sites like RTINGS?

PRTF: Why RTINGS is Wrong About Soundstage

RTINGS is widely known in our hobby for having a large library of headphone measurements, but they’ve garnered significant criticism for some of their scoring methods, as well as for how they evaluate some individual qualities of headphone sound. Their metric for assessing soundstage is perhaps the most vexing score out of all of them.

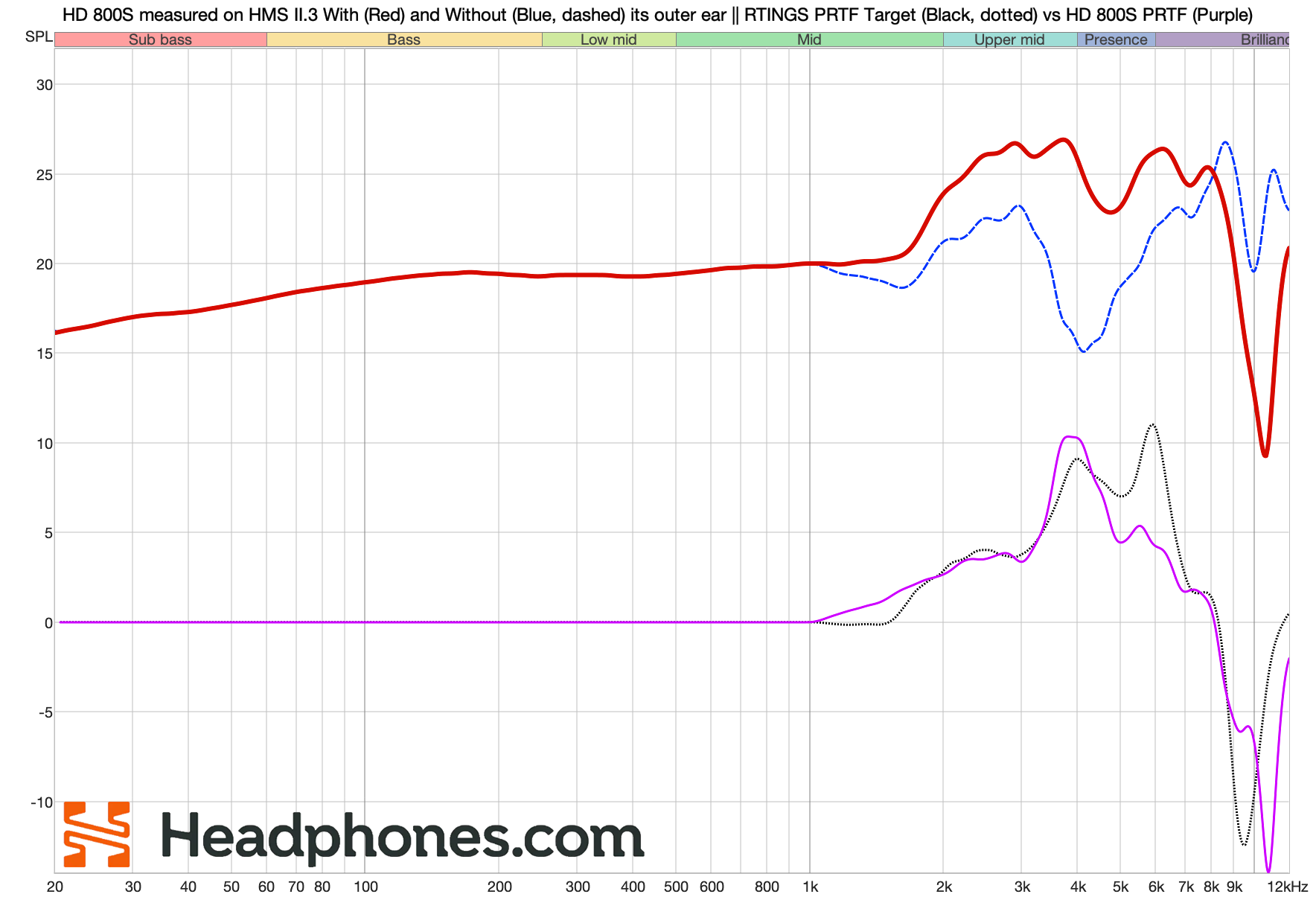

At the core of this approach is what they call “PRTF,” or “Pinna-Related Transfer Function.” PRTF per RTINGS is the frequency response difference between a sound source measured at the eardrum of their HEAD Acoustics HMS II.3 HATS with and without its outer ear.

Put simply: PRTF = (Frequency response measured with the outer ear) - (Frequency response measured without the outer ear).

Fig. 11: PRTF of Sennheiser HD 800S, measured by RTINGS

RTINGS attempts to predict a headphone’s soundstage in part by measuring how a headphone’s PRTF (Fig. 11, purple) compares to the PRTF of a flat speaker placed at a 30º angle in front of the measurement head (Fig. 11, black). How well the headphone’s PRTF matches the speaker’s is what RTINGS calls “PRTF Accuracy,” with more accuracy apparently being indicative of better soundstage.
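The PRTF definition and the headphone-versus-speaker comparison boil down to per-frequency subtractions in dB, as in this minimal Python sketch. The `prtf_mismatch` helper is hypothetical: it only shows the kind of comparison described above and is not RTINGS’s actual “PRTF Accuracy” formula, which isn’t reproduced here. The dB values are placeholders, not RTINGS data.

```python
def prtf(with_pinna_db: list[float], without_pinna_db: list[float]) -> list[float]:
    """PRTF in dB: response measured with the outer ear minus response without it."""
    if len(with_pinna_db) != len(without_pinna_db):
        raise ValueError("both responses must use the same frequency points")
    return [w - wo for w, wo in zip(with_pinna_db, without_pinna_db)]


def prtf_mismatch(headphone_prtf: list[float], speaker_prtf: list[float]) -> list[float]:
    """Hypothetical helper: per-frequency gap (dB) between two PRTF curves.

    Illustrative only; NOT RTINGS's actual scoring method.
    """
    if len(headphone_prtf) != len(speaker_prtf):
        raise ValueError("curves must share the same frequency points")
    return [h - s for h, s in zip(headphone_prtf, speaker_prtf)]


# Placeholder dB values at a few treble frequencies (NOT real measurements).
hp = prtf([2.0, 6.0, -3.0], [0.0, 1.0, 0.0])
spk = prtf([4.0, 8.0, -9.0], [0.0, 0.5, 1.0])
gap = prtf_mismatch(hp, spk)
```

Every input here comes from one particular head, so any quirk of that head’s outer ear flows straight into the result.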

There are myriad problems with this. For one, we know that headphones are devices of diffuse localization, therefore any coloration based on a specific sound source location (like a speaker placed 30º in front of the listener) is going to be heard as coloration in headphones, not as a localization cue.

But RTINGS also doesn’t account for the inherent differences between individual heads. We at Headphones.com know this to be a confounding variable, but in case it’s not clear why, let’s quickly discuss it.

Heads are Really Different

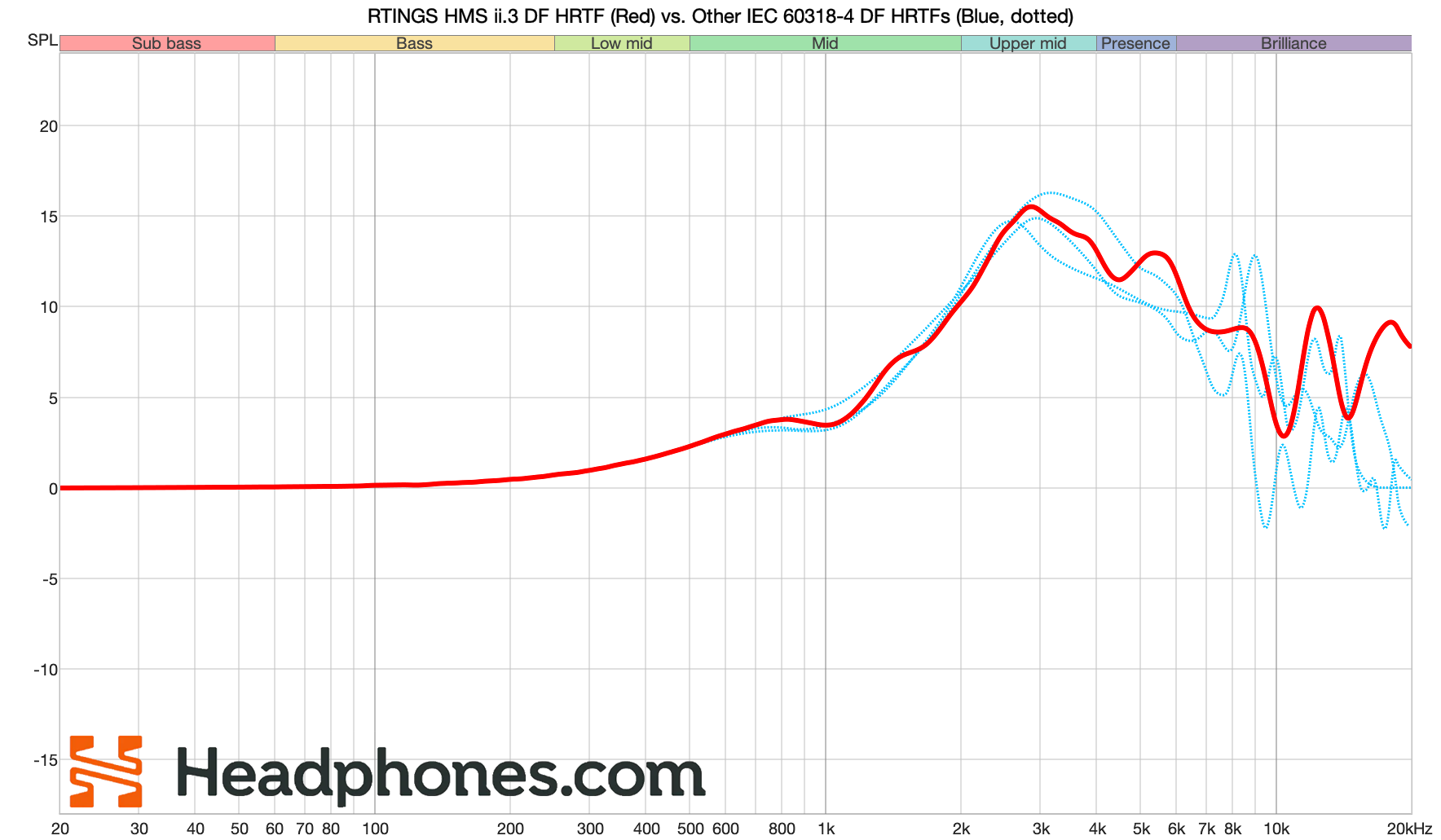

RTINGS uses the specific outer ear of their HMS II.3 HATS to judge the soundstage of all of the headphones they test. The problem is that heads—even heads built with the same industry standard in mind—measure quite differently in the treble, both with speakers and with headphones. And the treble is where RTINGS assesses and scores “PRTF Accuracy,” which they say determines “soundstage size.”

Fig. 12: DF HRTFs of all IEC 60318-4 compliant Head and Torso Simulators in blue, with RTINGS’s HMS II.3’s DF HRTF in red.

These are four different heads measuring a flat speaker in a Diffuse Field. Which one of these sets of vastly different treble cues is likely to best convey what audiophiles call “soundstage size”?

Which one of these sets of features is even most similar to the average listener?

Well, we don’t know. And that’s the problem.

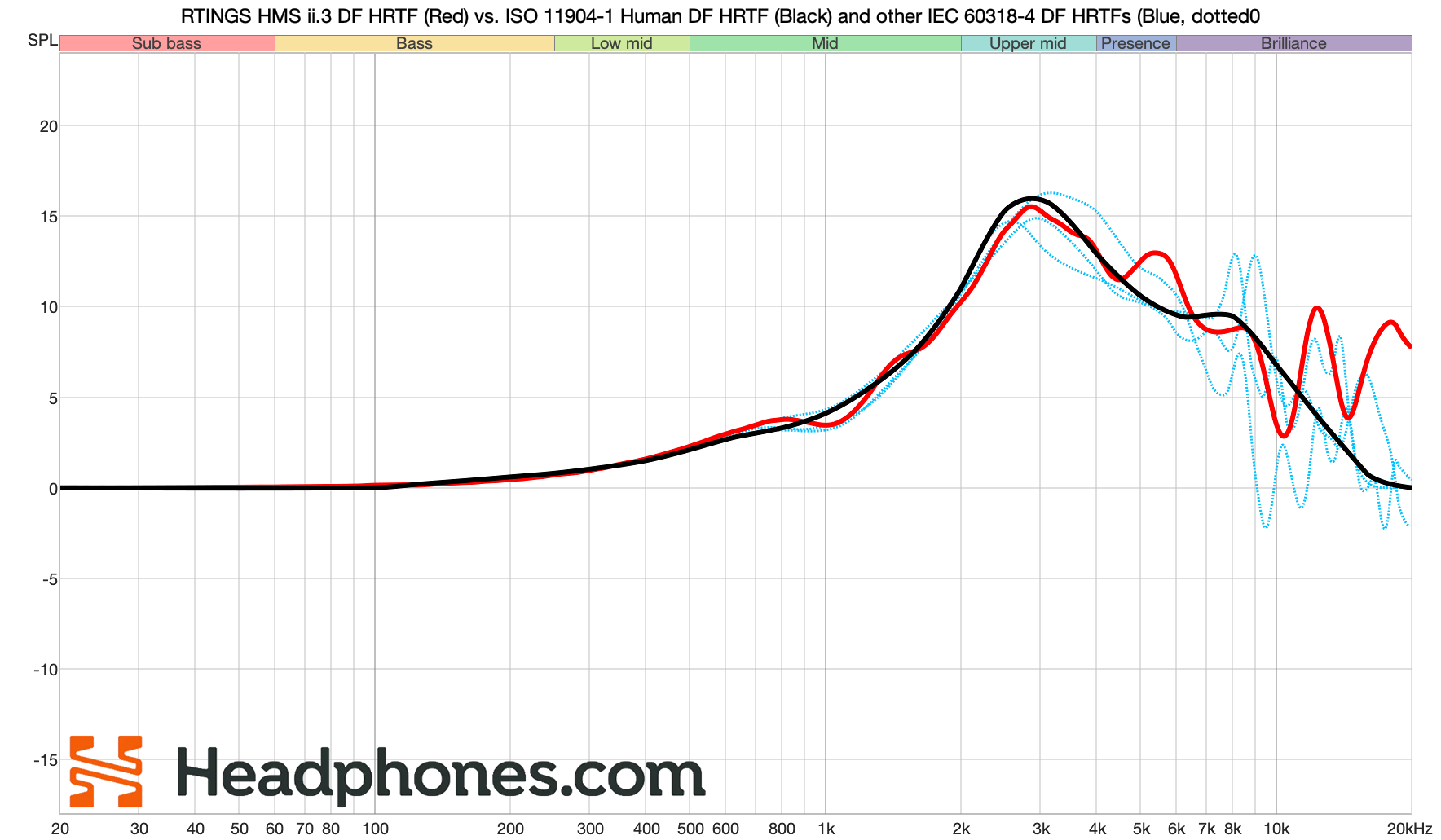

Claiming that the HMS’s ear correlates with those of the wider population of listeners rests on assumptions that simply aren’t very robust… especially when there’s data to suggest the ears RTINGS uses are the most divergent from an average human compared to the others (see Fig. 13).

Fig. 13: RTINGS HMS II.3 DF HRTF in red compared to ISO 11904-1 (Human) DF HRTF in black.

PRTF Distance

Unfortunately, this flawed approach also colors the rest of the metrics folded into this score, like “PRTF Distance,” which attributes perceived soundstage distance and elevation to the depth of a single frequency response cue—a 10 kHz dip—on a headphone measured with their HMS II.3.

But what if this dip is specific to this rig? Wouldn’t that mean it probably isn’t a good predictor of “soundstage distance and elevation,” and is more likely just a feature of this particular measurement fixture?

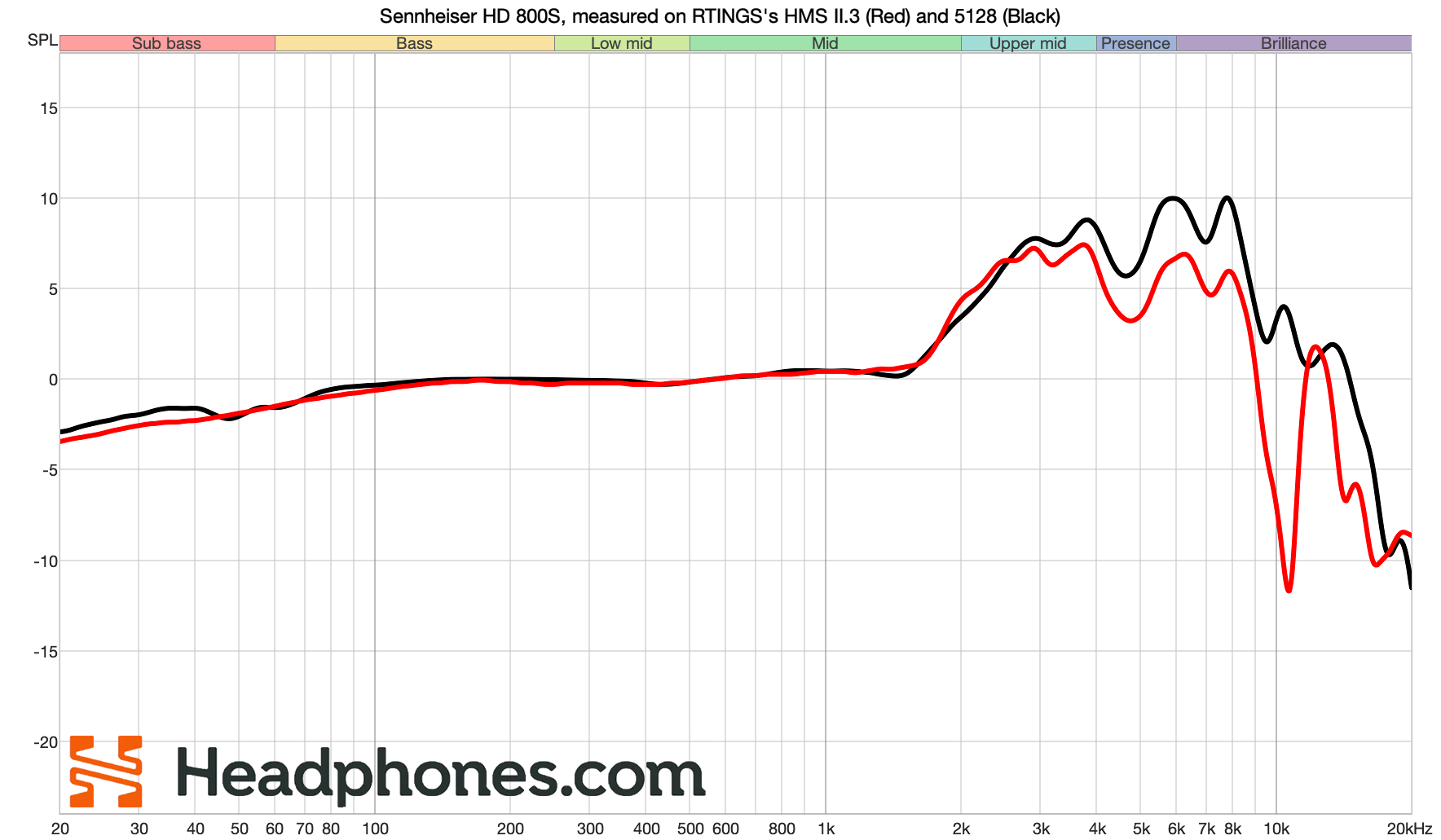

Fig. 14: RTINGS’s HD 800S measured on their HEAD Acoustics HMS II.3 and their B&K 5128 Credit: RTINGS

Indeed, it seems that is the case. These are the exact same headphones measured on RTINGS’s HMS II.3 and their B&K 5128, and it seems the 10 kHz dip they attribute good “PRTF Distance” to is entirely a feature of the HMS rig in particular.

Not only is this a problem for the HMS vs. another rig, but this feature may not even exist on human heads, as shown in Fig. 15.

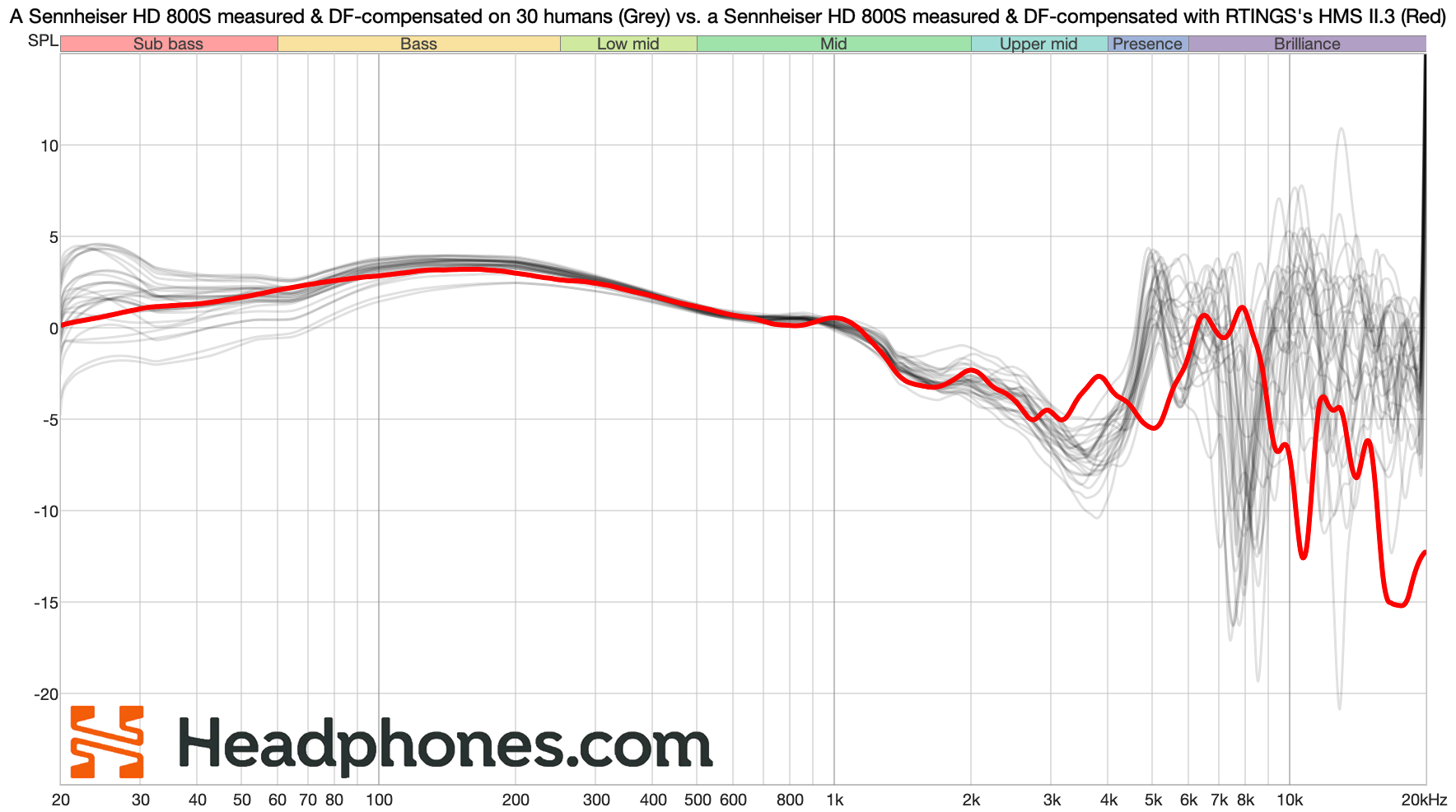

Fig. 15: An HD 800S measured on RTINGS’s HMS II.3, compared to an HD 800S measured on 30 humans. All measurements are compensated to the Diffuse Field HRTF of the head they were measured on. Credit: Fabian Brinkmann et al. “The HUTUBS head-related transfer function (HRTF) database”

The 10 kHz feature RTINGS assigns significance to here is not only rig-specific, but also unlike the typical response a human may get with this kind of headphone. This may be why the HMS II.3 and other heads adhering to this standard are only rated for accuracy up to about 8-10 kHz.

This serves as another indication that assigning too much significance to treble features of the HMS II.3—or any other HATS, for that matter—probably isn’t a good idea.

This Score is Actively Harmful

RTINGS’s claim that frequency response colorations from one head are somehow a robust predictor of everyone’s experience of headphone soundstage is pretty obviously false once it’s challenged with data. The problem is that no one has publicly made the counterargument until now.

What RTINGS has offered here is an under-researched, scientific-seeming explanation of something much more complex than a single measurement on a single head can ever hope to capture.

The problem is that because it seems scientific, many people buy into it. I know I did, which is why I bought a Philips Fidelio X2HR and ended up wondering why they didn’t have the incredible soundstage that RTINGS’s scores implied they would.

RTINGS’s Soundstage metric furthers misinformation regarding what soundstage is, and at times directly leads people to make purchase decisions based on faulty assumptions and bad data. This has already led to some consumers being unhappy with their purchases, and unfortunately, this is likely to continue unless they amend or remove this metric.

This approach to scoring Soundstage also goes some way toward invalidating people whose experiences diverge from it. If someone ended up really enjoying the soundstage of the Sennheiser Momentum 4 Wireless, RTINGS’s fairly negative score for this headphone’s “soundstage” might end up causing the listener to erroneously second-guess themselves.

This rubric also makes no attempt to account for the differences in perceived soundstage of IEMs. Even though these differences are quite minor to me, for some people it seems the differences are significant enough to note (at least, if their reactions are anything to go on).

In my view, the audiophile consumer space would be better off if RTINGS removed this metric entirely. Numerous people factor this incredibly flawed metric into their decision-making when making a purchase because it seems legitimate… but it isn’t.

Ideally, people wouldn’t be taking it seriously, but consumers take soundstage seriously enough that any supposed explanation is something they’re really going to gravitate towards. Unfortunately most audio consumers simply don’t know that what they’re being shown is actually just misinformation bolstered by the implied authority of data.

If It’s Not Soundstage, What Is It?

While headphones don’t have “actual soundstage,” they do have a psychoacoustic effect of “spaciousness.” I don’t want to make it seem like I’m saying this effect doesn’t exist. It does, I’ve heard it plenty of times. But almost every factor that informs this perception of spaciousness is separate from our normal process of localizing sound sources. It is also incredibly subjective. This is why I’d say readers should take anyone and everyone else’s reports regarding spaciousness with a truckful of salt.

People are often hyperbolic about the magnitude of this quality’s effects. While I don’t think that’s necessarily a bad thing—people enjoying themselves and getting excited about headphones is a good thing!—in the absence of actual information about what soundstage is & isn’t, the exaggeration of soundstage’s effect in headphones has led to a ton of confusion in this hobby, especially for newcomers.

To my mind, there are three big factors that may contribute to the impressions of soundstage we get with headphones and IEMs. So let’s go over the questions these factors pose to determine how exactly they may contribute, as this will help us understand everything that needs to be considered when someone else claims a headphone has “good soundstage.”

Because soundstage is not some elusive acoustic quality that cannot be measured as many may claim, but rather a confluence of factors that we all agree exist and matter quite a bit.

Important Disclaimer!

Since I want to talk about these questions, I should also disclaim that the rest of the article is purely based on my personal understanding and experience of headphones. We are now leaving the realm of “generally agreed upon science” and entering the realm of “just my experience,” so please be aware: there isn’t much data to back up the following other than my years in the hobby listening to and discussing headphones.

I will be using the audiophile terms in the way that makes sense to me given my understanding. No scores or analytical metrics are going to be introduced because of these observations. Readers are extremely welcome to disagree, but I implore all to keep an open mind in case any of these things actually do help to explain or aid in communicating their experience of soundstage.

Openness?

While I’ve no hard data to back up the correlation I see between a headphone’s openness and listener reports of soundstage, the correlation is evident nonetheless.

People tend to report open-back headphones as more spacious or having better soundstage than closed-back headphones or IEMs, often citing differences in overall “stage size” and a lack of claustrophobic crowding to go along with it. This makes intuitive sense to a lot of people, such that I’ve only begun to see this paradigm questioned fairly recently.

While openness itself—or the lack thereof—does actually seem to have consistently measurable traits in frequency response (like many closed back headphones and IEMs having a dip somewhere in the low midrange), it also has sonic benefits separate from frequency response that could affect our perception of soundstage.

The occlusion effect—the feeling of uncomfortable low-frequency amplification that arises when the ear canal is fully or partially sealed—is less prominent the more open the path to the eardrum is. With IEMs we typically get a lot of occlusion effect, with closed headphones we get a little bit, and with open headphones we get nearly none.

We can detect this effect even when no music is playing, so it’s not really a quality of the sound of the headphone itself as much as a quality of the acoustic condition our ear is placed in when we wear the headphone.

Because our ears can detect this effect regardless of music playing, it could absolutely also affect our perception of the music playing within that space.

Our ears being in a condition that’s more similar to the unoccluded environment when listening to sounds in the real world—often called “free air”—could make us perceive the sound as more akin to sounds in the real world, and vice versa; hearing more of the occlusion effect could cue our brains to know that what we’re listening to is very much not “sound arriving at our ear through free air.”

How big of an effect this has on soundstage specifically is hard to quantify, and has yet to be researched fully. But I wouldn’t be surprised if it has a bigger effect than people currently give credit for.

Comfort?

Even though I’ve written an entire piece in defense of comfort, I still don’t see nearly enough talk about this aspect of headphone performance in our community. In this case, though, I think good comfort may benefit our perception of soundstage as well.

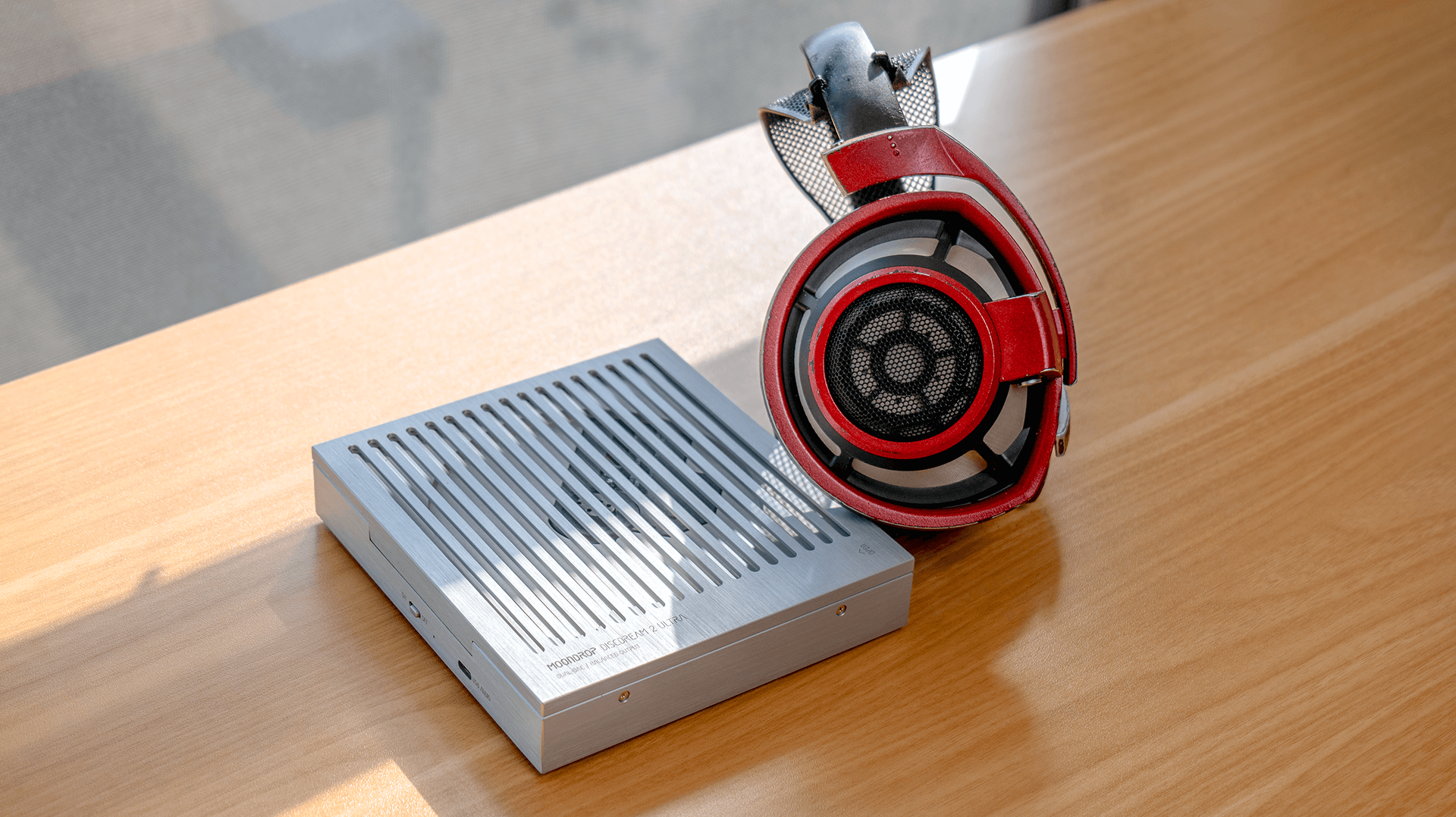

I think there’s a case to be made that headphones touching your ears are less likely to be perceived as spacious. This is one guess I have as to why the Sennheiser HD 800S, with its gargantuan earcups, is near-unanimously regarded as one of the most spacious headphones out there, while the Sennheiser HD 600, with its rather shallow cups and vise-like clamp, is almost never claimed to be similarly spacious.

The HD 800S has one of the largest earcup interiors I’m aware of, such that when most people wear one, their ears usually aren’t touching anything. With the HD 800S, some of the tactile sensations telling users they’re wearing headphones are reduced, so the listener may actually find it easier to ignore the headphone being there. This could, in theory, allow for more efficient immersion into the music they’re listening to, and perhaps with that ease of immersion, “better soundstage.”

If you’re like me and find the occlusion effect physically uncomfortable, then that’s even more reason to think comfort could play a role. The lack of this effect would be more physically comfortable, and could therefore be beneficial to our sense of immersion (and therefore, experience of soundstage).

The theoretical contribution of these two factors, comfort and openness, holds true when one thinks about “earspeaker” headphones like the RAAL SR1b or the AKG K1000 as well. As headphones that exist in a totally free-air acoustic environment and don’t touch the outer ear at all, they’re similarly regarded as some of the most spacious headphones out there, despite being tuned very differently from something like an HD 800S.

Of course, these reports based on comfort are all sighted. I’d be interested to see testing done to determine how much this affects people’s reports in blind tests when certain controls are in place. My biased opinion is that again, comfort likely accounts for more than people currently give credit for, though of course it’s unlikely to be the only factor responsible.

Frequency Response?

Now that we’ve talked about the non-sound reasons for people perceiving certain headphones as spacious, it’s probably worth diving into the reasons why frequency response likely plays a dominant role in the perception of soundstage. Especially because stereo music really only exists in one dimension from left to right… So where does all of the other “headphone presentation” stuff like width, distance, and lateral image separation come from?

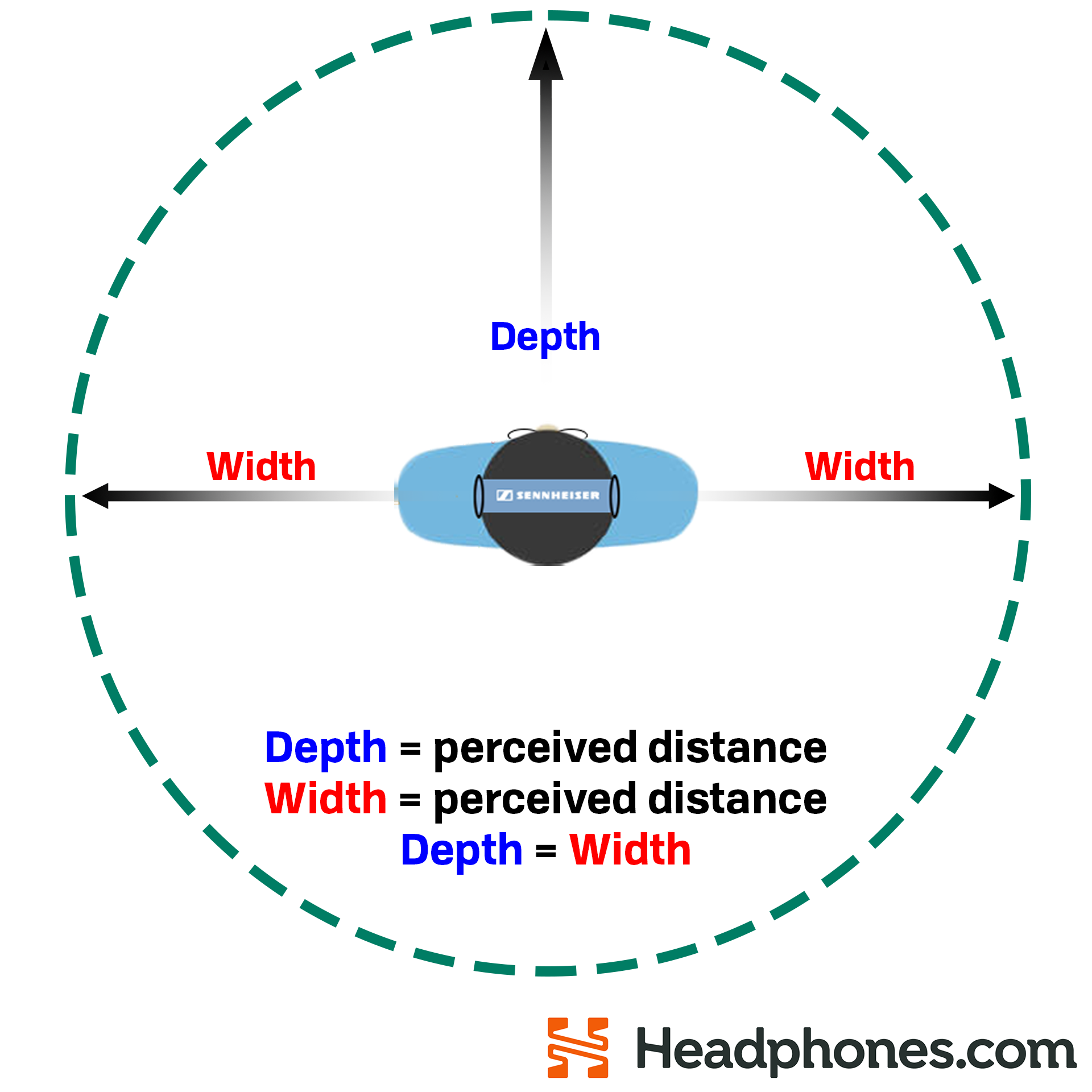

Width

In headphones, distance and “width” as audiophiles tend to refer to it actually exist on the same axis—radial distance from the centrepoint (the listener). The only difference is that “distance” is usually thought of regarding elements panned towards the front, whereas “width” is the distance of elements panned to the sides.

Fig. 15: Perceived depth and width shown as a function of perceived axial distance from the centrepoint (the listener)

While the distinction between a “left-right” and a “front-back” axis might seem intuitive and more representative of the experience to some, it contributes a ton of confusion because stereo music doesn’t really have a front-back axis. Additionally, I’ve rarely, if ever, perceived a headphone to be good at one but not the other.

So I’ll be simplifying things here, and folding them both under the quality of “distance” in the hopes that it simplifies understanding for people.

Because stereo music only exists on one axis from left to right, getting any sense of distance requires trickery. To get a sense of what kind of trickery is even possible, it’s instructive to look at how recording engineers make elements of their mixes sound distant, and which of the tools in their toolkit are actually present with headphones themselves.

Distance

David Gibson’s “The Art Of Mixing” is a seminal text regarding the creation of stereo music, and a glance at the cover may even be enough to get audiophiles excited about it: visualizing or communicating stereo music in headphones as being akin to elements in 3D space is something that audiophiles tend to enjoy.

In this book, as well as in its delightfully silly video accompaniment, Gibson talks at length about how to visualize stereo mixes as arrangements of elements in 3D space, and does so in a way that makes sense both to the people making music and to ordinary listeners.

Fig. 16: David Gibson demonstrates how volume and distance may be linked visually

Gibson draws a critical connection between volume and the perception of distance in stereo music. This link has also been studied academically, in terms of how people’s sense of distance in the real world is informed by the volume of the sound source. While this is still an area of ongoing study, a correlation between sound level and source distance has been observed in multiple studies, and it’s rather intuitive: things that are farther away are generally also quieter.
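To put a rough number on “farther away is quieter”: for an idealized point source in a free field (no walls, no reflections), level falls by 20·log10 of the distance ratio, which works out to about 6 dB per doubling of distance. This is the textbook inverse distance law, not a model of how listeners actually judge distance, and real rooms and instruments deviate from it. The function name and the 80 dB reference level below are hypothetical.

```python
import math


def level_at_distance(ref_level_db: float, ref_distance_m: float, distance_m: float) -> float:
    """Free-field level of an idealized point source at distance_m.

    Assumes the inverse distance law: sound pressure falls as 1/r, so the
    level drops by 20*log10(r / r0) dB relative to the reference distance r0.
    """
    if ref_distance_m <= 0 or distance_m <= 0:
        raise ValueError("distances must be positive")
    return ref_level_db - 20.0 * math.log10(distance_m / ref_distance_m)


# Hypothetical source measured at 80 dB SPL from 1 m away.
for d in (1, 2, 4, 8):
    print(f"{d} m: {level_at_distance(80.0, 1.0, d):.1f} dB")
```

Run as-is, it prints 80.0, 74.0, 68.0 and 61.9 dB for 1, 2, 4 and 8 m.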

As we’ve discussed, headphones themselves don’t really add interaural timing and level differences, or reverberation, the way real spaces do, which eliminates most of the tools a studio engineer would use to engender a sense of distance, like echo, reverb, or time delays. However, headphones do have volume! In fact, they have a different volume at every frequency, which is what we call “frequency response.”

And in my view, the relative volume differences between areas of the frequency response are extremely likely to play a part in how listeners perceive distance in headphones, because differences in volume between frequency ranges can often be heard as indicative of differences in distance between these ranges.

For example, many find that a significant frequency response difference between lower midrange and upper midrange—like the 2 kHz scoop found on Hifiman headphones or the Sennheiser HD 800S—engenders a sense of perceived distance. The difference in volume between these regions can often be perceived as a difference in physical distance between these regions’ portrayal of an instrument or voice.

Fig. 17: Headphones with the “soundstage” scoop

Say we’re listening to a solo male vocalist on an HD 800S: the lower midrange fundamentals may sound “closer” to the listener than the relatively quieter—and therefore more “distant”—upper midrange harmonics. Because of this, the sense of distance overall may seem more exaggerated than on a headphone where the lower and upper midrange are closer in perceived volume (and thus, closer on the plane of perceived distance).

This can be true of the opposite coloration as well. Some listeners report that lower midrange scoops, like the one found in Harman’s IEM targets, result in more perceived depth, likely because the quieter, more “distant” lower midrange fundamentals are perceived as farther away relative to the louder, “closer” upper midrange harmonics. Or, akin to the first example, they hear the generous bass shelf as bringing bass or drum fundamentals physically closer than the relatively distant lower midrange.

Regardless, contrast in SPL does seemingly equate to contrast in distance for some listeners.

Fig. 18: IEMs with the “contrast” scoop

This relationship of “difference = distance” can really hold true for any relationship between frequency regions… which is partly why it’s been difficult to point to any one frequency response feature as “the soundstage on a graph.”

As our approach to headphone measurements has evolved, we’ve learned that differences across heads can be shockingly large. This means we’re probably best served not by searching for “the soundstage on a graph” as a single coloration, but by treating it as a type of contrast relationship between regions. We should focus on describing the listener’s experience instead of erroneously prescribing what will and won’t have soundstage based on a feature on a graph.
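One way to make “contrast between regions” concrete is to compare the average level of two frequency bands on a compensated response. The sketch below is hypothetical throughout: the helper names, the band edges, and the toy response (flat except for a synthetic dip centred on 2 kHz) are illustrative assumptions, not a metric from this article or an established soundstage measure, and a larger number is not claimed to mean more soundstage for any given listener.

```python
import math
from typing import Sequence, Tuple


def band_mean_db(freqs: Sequence[float], levels_db: Sequence[float],
                 band: Tuple[float, float]) -> float:
    """Average level (dB) of response points whose frequency lies in [lo, hi]."""
    lo, hi = band
    picked = [lvl for f, lvl in zip(freqs, levels_db) if lo <= f <= hi]
    if not picked:
        raise ValueError(f"no response points in band {band}")
    return sum(picked) / len(picked)


def band_contrast_db(freqs, levels_db, band_a, band_b) -> float:
    """Hypothetical contrast measure: mean level of band_a minus band_b, in dB."""
    return band_mean_db(freqs, levels_db, band_a) - band_mean_db(freqs, levels_db, band_b)


# Toy response: 30 log-spaced points per decade from 20 Hz to 20 kHz, 0 dB
# relative to a target everywhere except a synthetic -4 dB dip around 2 kHz.
# This is NOT a measurement of any real headphone.
freqs = [20 * 10 ** (i / 30) for i in range(91)]
levels = [-4.0 * math.exp(-(math.log2(f / 2000) ** 2) / 0.5) for f in freqs]

lower_mid = (200.0, 800.0)
upper_mid = (1500.0, 3000.0)
print(f"lower minus upper midrange: {band_contrast_db(freqs, levels, lower_mid, upper_mid):+.1f} dB")
```

A flat response gives 0 dB of contrast; the toy dip makes the lower midrange a few dB louder than the upper midrange. The same function could just as easily compare bass against lower midrange, which reflects the point that many different band relationships might be heard as “distance.”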

My daily driver is a Sennheiser HD 800, and it is significantly less spacious after I’ve EQed away its large deviations in frequency response. EQ isn’t some magical soundstage-sucking tool, of course; all I’m doing is changing the levels of the frequencies coming out of the headphone. But the same holds true for other headphones known for spaciousness that I have on hand, like the Hifiman Edition XS.

Whenever I’ve EQed any headphone I’ve used to have minimal noticeable colorations across the bass, midrange, and treble, any illusion of extra contrast on the “close-to-distant” plane that isn’t inherent to the music is removed, and every sonic element is placed on the same plane of distance.
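In practice, “EQing away deviations” means applying, at each frequency, the difference between a target and what the headphone measures. A minimal sketch, assuming you already have a response and a personal target sampled at the same frequencies: the helper name, the boost and cut limits, and the five deviation values are hypothetical, and, as discussed above, a rig measurement is only an estimate of what happens on your own head.

```python
from typing import List


def correction_gains_db(measured_db: List[float], target_db: List[float],
                        max_boost_db: float = 6.0, max_cut_db: float = 12.0) -> List[float]:
    """Per-point EQ gain that moves a measured response onto a target.

    gain = target - measured, clamped so that boosts and cuts stay within
    the (illustrative) limits, e.g. to preserve headroom.
    """
    if len(measured_db) != len(target_db):
        raise ValueError("measured and target must have the same length")
    gains = []
    for m, t in zip(measured_db, target_db):
        g = t - m
        gains.append(max(-max_cut_db, min(max_boost_db, g)))
    return gains


# Hypothetical deviations from a personal target (dB) at
# 100 Hz, 1 kHz, 2 kHz, 6 kHz and 11 kHz.
measured = [1.0, 0.0, -4.0, 3.0, 10.0]
target = [0.0] * len(measured)
print(correction_gains_db(measured, target))
```

With these toy numbers it prints [-1.0, 0.0, 4.0, -3.0, -10.0]: a 10 dB cut where the hypothetical 11 kHz peak sits and a 4 dB boost to fill the 2 kHz dip. The boost limit is there because large boosts cost headroom; whether to fill narrow dips at all is a judgment call.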

It’s important to note here that I don’t have any data on how consistently certain colorations would be heard as “distance” by listeners.

Someone may well find the 2 kHz dip to have a negative effect on spaciousness. Instead of hearing “the distance between lower and upper midrange,” they may only hear “the cloying closeness of lower midrange.” Similarly, someone may perceive the Harman IE target as “excessively shouty and in-their-face,” instead of hearing the contrast between lower and upper midrange as indicative of distance.

This likely isn’t widely acknowledged yet because many enthusiasts who don’t quite understand frequency response think one type of subjective perception can only be explained by one type of frequency response coloration. In actuality, if we want to better understand subjective effects like soundstage, we need to accept that many different types of coloration can be responsible for the same phenomena being reported.

A 2 kHz dip, a 200 Hz dip, or potentially any other coloration can be responsible for two people reporting the same quality of spaciousness, just as two people hearing the exact same headphone can report differences in this quality. This is why it’s important to understand and frame things, especially our own experiences, in terms of frequency response.

When we frame our subjective experiences solely through the lens of “audiophile speak,” we could be talking about the same thing using different words, or about different things using the same words. But when we frame things in terms of frequency response, like saying “the 2 kHz dip sets piano attack nicely to the rear of the mix,” we give other people an anchor for understanding what part of the headphone’s acoustic response on our head is actually contributing to our impression.

Through frequency response we can better understand our own experiences with soundstage, communicate them more efficiently, and perhaps even predict for ourselves what kind of headphone tuning will have the most enjoyable soundstage for us. But to get there, we will need to build a more robust understanding of headphone tonality, and this is a project I’m excited to embark on with our community and Headphones.com.

Image Separation

I’ve mentioned this a few times on our podcast, The Noise Floor, but for those who aren’t regular viewers: what I’ll call “image separation” here is almost entirely down to frequency response for me.

And to be clear, I’m not talking about the feeling of distinctness between two instruments/voices in a mix that are panned directly on top of each other, which may very well be what some others would call “image separation.” What I’m referring to is the accuracy of stereo panning and stereo separation between elements of a mix on the lateral (left to right) plane.

Headphones are an inherently compromised situation, indelicately smushing stereo panning that was intended for speakers into a binaural listening medium. How much of the lateral placement, distance, and separation between differently-panned elements in the mix is preserved is what I mean by “image separation.”

While most would probably agree that things like channel balance could play a significant role in this separation, I would take it a step further and say that the headphone’s tuning plays a role as well... and perhaps not the role you think.

Fig. 19: Sennheiser HD 650 vs. Hifiman Edition XS, measured and DF compensated on GRAS 43AG-7

Say I have a Sennheiser HD 650 and a Hifiman Edition XS in front of me and I want to A/B them to test which has the more precise imaging of—and better separation between—sonic elements of my music. I put the HD 650 on, and the biggest treble peak I hear is a ~3 dB peak above “neutral” around 11 kHz. When I put the Edition XS on my head, I’ll have a peak in a similar spot, except now it’s a ~10 dB peak above “neutral.”

There’s a tambourine in the track I’m listening to with a good amount of energy in this region, and it’s slightly panned to the left, having a ~2 dB difference between left and right channels favoring the left side. This is all stereo panning is, after all: volume difference between left and right channels.
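Since panning here is just a level difference between channels, it can be computed directly. A minimal sketch, assuming the common sine/cosine constant-power pan law (pan laws differ between consoles and DAWs, so this is one choice, not the one every mix uses); the function names are hypothetical.

```python
import math


def constant_power_gains(pan: float) -> tuple[float, float]:
    """Left/right gains for a pan position in [-1 (hard left), +1 (hard right)].

    Sine/cosine constant-power law: g_left**2 + g_right**2 == 1 everywhere.
    """
    if not -1.0 <= pan <= 1.0:
        raise ValueError("pan must be in [-1, 1]")
    theta = (pan + 1.0) * math.pi / 4.0  # maps pan to 0 .. pi/2
    return math.cos(theta), math.sin(theta)


def interchannel_difference_db(pan: float) -> float:
    """Left-minus-right level difference (dB) for a given pan position."""
    if not -1.0 <= pan <= 1.0:
        raise ValueError("pan must be in [-1, 1]")
    if abs(pan) == 1.0:
        # Hard pan: one channel is silent, so the difference is unbounded.
        return math.inf if pan < 0 else -math.inf
    g_left, g_right = constant_power_gains(pan)
    return 20.0 * math.log10(g_left / g_right)


def pan_for_difference_db(diff_db: float) -> float:
    """Inverse of the above: pan position giving a left-minus-right difference."""
    ratio = 10.0 ** (diff_db / 20.0)  # g_left / g_right = cot(theta)
    theta = math.atan2(1.0, ratio)    # tan(theta) = g_right / g_left
    return theta * 4.0 / math.pi - 1.0


# The tambourine from the example: ~2 dB louder in the left channel.
p = pan_for_difference_db(2.0)
print(f"pan = {p:+.3f}, difference = {interchannel_difference_db(p):.2f} dB")
```

Under this pan law, a 2 dB difference corresponds to a pan position of roughly -0.15 on a -1 to +1 scale, only slightly left of centre, which illustrates how small the cue in this example is.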

The headphone that conveys the degree of panning (and thus, “image separation”) most accurately will be the one that lets me better discern this 2 dB difference between left and right channels across all frequencies.

With the HD 650, I can easily tell that the tambourine is slightly panned to the left, because the difference between a 4 dB 11 kHz peak in my left ear and a 2 dB 11 kHz peak in my right ear is going to be fairly easy to discern.

But with the Edition XS, I might genuinely have a hard time discerning the difference between an 11 dB peak and a 9 dB peak. Both peaks may be large enough that my perception is dominated by “this is too bright,” instead of “this sounds slightly to the left.”
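The numbers in this example can be laid out explicitly. The sketch assumes, as the 4/2 dB and 11/9 dB figures above imply, that the 2 dB pan splits symmetrically around each headphone’s own peak; the helper name is hypothetical. Note what it shows: the interchannel difference in the signal is 2 dB on both headphones, so the argument is about how audible that difference is on top of a large peak, which this arithmetic does not model.

```python
def per_ear_peak_levels(peak_db: float, interchannel_diff_db: float) -> tuple[float, float]:
    """Level of a treble peak in each ear (dB above "neutral") for a left-panned source.

    Assumes the interchannel difference splits symmetrically around the
    headphone's own peak: left gets +half, right gets -half.
    """
    half = interchannel_diff_db / 2.0
    return peak_db + half, peak_db - half


for name, peak in (("HD 650", 3.0), ("Edition XS", 10.0)):
    left, right = per_ear_peak_levels(peak, 2.0)
    print(f"{name}: left {left:.0f} dB, right {right:.0f} dB, difference {left - right:.0f} dB")
```

It prints a left/right of 4/2 dB for the HD 650 and 11/9 dB for the Edition XS, with a 2 dB difference in both cases.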

Therefore, as the level difference between the two channels of the Edition XS is perceptually diminished, the perceived sonic image drifts towards the center, because panning is the perception of difference between channels. If I can’t perceive the difference between the channels well, I can’t hear the panning well either.

Once I’ve EQed this 11 kHz peak away, the clarity of image placement and separation is massively improved on the Edition XS, sounding much less “center-focused” and much more accurately spaced out and “panoramic”.
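For readers who want to try this, a single parametric (peaking) filter is the usual tool for taming one peak. The sketch below uses the peaking-EQ formulas from Robert Bristow-Johnson’s widely used “Audio EQ Cookbook.” The 11 kHz centre, -10 dB cut, Q of 2, and 48 kHz sample rate are hypothetical settings chosen to echo the example above, not the author’s actual EQ; where your own peak sits, and how large it is, depends on your head and ears.

```python
import cmath
import math
from dataclasses import dataclass
from typing import Iterable, List


@dataclass
class Biquad:
    """Biquad coefficients, normalised so that a0 == 1."""
    b0: float
    b1: float
    b2: float
    a1: float
    a2: float


def peaking_eq(f0_hz: float, gain_db: float, q: float, fs_hz: float) -> Biquad:
    """Peaking EQ biquad using the Audio EQ Cookbook formulas."""
    if not 0 < f0_hz < fs_hz / 2:
        raise ValueError("f0 must lie between 0 and Nyquist")
    a = 10.0 ** (gain_db / 40.0)
    w0 = 2.0 * math.pi * f0_hz / fs_hz
    alpha = math.sin(w0) / (2.0 * q)
    cos_w0 = math.cos(w0)
    a0 = 1.0 + alpha / a
    return Biquad(
        b0=(1.0 + alpha * a) / a0,
        b1=(-2.0 * cos_w0) / a0,
        b2=(1.0 - alpha * a) / a0,
        a1=(-2.0 * cos_w0) / a0,
        a2=(1.0 - alpha / a) / a0,
    )


def magnitude_db(f: Biquad, freq_hz: float, fs_hz: float) -> float:
    """Magnitude response of the filter at freq_hz, in dB."""
    z1 = cmath.exp(-1j * 2.0 * math.pi * freq_hz / fs_hz)  # z^-1 on the unit circle
    num = f.b0 + f.b1 * z1 + f.b2 * z1 * z1
    den = 1.0 + f.a1 * z1 + f.a2 * z1 * z1
    return 20.0 * math.log10(abs(num / den))


def process(f: Biquad, samples: Iterable[float]) -> List[float]:
    """Filter samples with the Direct Form I difference equation."""
    x1 = x2 = y1 = y2 = 0.0
    out = []
    for x0 in samples:
        y0 = f.b0 * x0 + f.b1 * x1 + f.b2 * x2 - f.a1 * y1 - f.a2 * y2
        x2, x1 = x1, x0
        y2, y1 = y1, y0
        out.append(y0)
    return out


# Hypothetical settings: cut 10 dB at 11 kHz with Q = 2, 48 kHz sample rate.
cut = peaking_eq(11_000.0, -10.0, 2.0, 48_000.0)
for hz in (1_000, 8_000, 11_000, 14_000):
    print(f"{hz:>6} Hz: {magnitude_db(cut, hz, 48_000.0):+.1f} dB")
```

At the centre frequency the cookbook peaking filter applies exactly the requested gain, and it returns to 0 dB at DC and far from the centre. Direct Form I is used here for readability; for a gentle filter like this in double precision it is numerically fine.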

Even though the Edition XS has a much better reputation for image separation than the HD 650, its separation is noticeably worse for me, despite the HD 650 being among the least spacious open-back headphones out there.

Now on some people’s heads, the opposite may occur.

As shown around 8 kHz in Fig. 20, for some people the HD 650 may have a similarly large dip in the treble that makes the level difference between channels in that region just as difficult to parse. For this same person, the Edition XS may have a more “normal” on-head treble response, which would make the difference in level between channels easier to discern and the headphone’s stereo placement more true to the mix.

Fig. 20: Sennheiser HD 650 vs. Hifiman Edition XS, measured and DF compensated on clone GRAS with KB006x pinnae

This is all to say that closeness to “neutral” tone on the head of the listener likely results in better precision of image placement and separation between stereo-panned elements of a mix, because large deviations (mostly in the treble) make it harder to discern the level difference between channels in a given region.

Again, I have no evidence that this is behind the entirety of what audiophiles call “image separation,” since things like overall tone and openness/comfort may also play a role. But in my own experience, steering treble peaks and dips towards my own perception of “neutral” has always improved the precision of image placement and separation between elements of my music.

It basically comes down to “what frequency response best allows me to hear the differences in panning that the mix engineer intended,” and the answer is almost always something that is also perceptually neutral for me.

Conclusion

Soundstage is… well, clearly it’s a lot more complicated than the discourse currently gives it credit for. It’s among the most important things to headphone consumers, but it’s also among the least understood.

I don’t want people to come away from this thinking they shouldn’t care about soundstage if it’s their favorite part of the headphones they currently love. It’s not my place to dictate how other people enjoy their headphones.

I do not and cannot know what is going on at (or between) the ears of the people reading this. Hell, I barely even know what’s going on at or between my own.

But I want to help everyone—the soundstage enjoyers, the soundstage agnostic, and the people who’ve not heard soundstage for themselves yet—understand this quality better so we can more efficiently chase the enjoyable experiences we crave with headphones.

Unfortunately, many people begin this hobby chasing a sensation they’ve never even heard because others make it sound appealing. Many of these people end up incredibly disappointed when they finally hear a Sennheiser HD 800S, and realize that it’s just another headphone with more or less the same “helmet-like” sonic presentation all of them have.

The point of this piece is not to say that a subjective sense of spaciousness doesn’t exist, and isn’t important to people, but that a large contributor to this effect is likely measurable with the tools we already have.

We don’t need to invent newfangled metrics like PRTF. Our main hurdles are overcoming a gap in understanding regarding how to link openness, comfort, and frequency response with our individual perceptions of sound, and how to communicate our experiences effectively with respect to that understanding.

The lack of evidence and understanding regarding the degree to which soundstage actually exists & differs between headphones has allowed hyperbole and pseudoscience to rule the conversation. That is why I wanted to write this piece. New people join our hobby every day, and new people are confused every day by the dearth of meaningful explanations regarding this quality.

What I want more than anything is for things to get less confusing as the hobby itself matures.

While headphone soundstage definitely does exist in some way, shape, or form, it almost certainly doesn’t exist in the hyperbolic or pseudoscientific way many sites or people talk about it existing. Hopefully this article assists in bringing a more realistic balance to the conversation regarding “soundstage,” so we can help listeners manage their expectations and bring the discourse as a whole to a more reasonable middle. Thanks for reading.

If you have any questions about this article, feel free to ping me in our Discord channel or start a discussion on our forums; that’s where I and a bunch of other headphone and IEM enthusiasts hang out to talk about stuff like this. Thanks so much to all of you reading. Until next time!